Publications

Interaction-Required Suggestions for Control, Ownership, and Awareness in Human-AI Co-Writing

Authors: Kenneth C. Arnold, Jiho Kim

Proceedings of the Fourth Workshop on Intelligent and Interactive Writing Assistants (In2Writing), NAACL 2025 • May 4, 2025

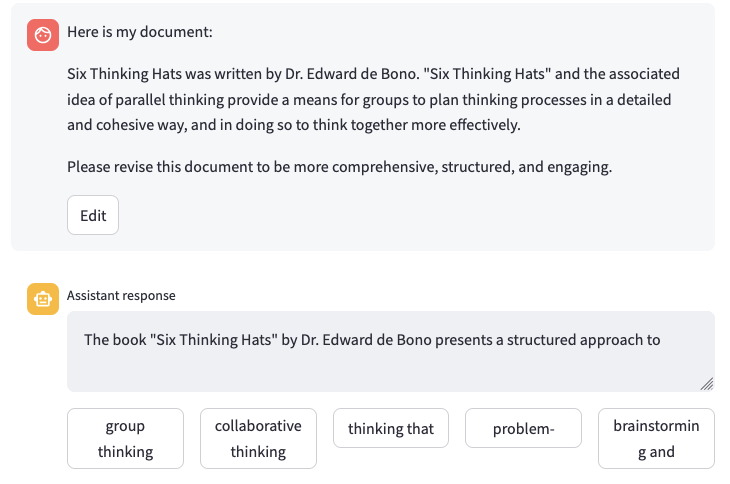

This paper explores interaction designs for generative AI interfaces that necessitate human involvement throughout the generation process. We argue that such interfaces can promote cognitive engagement, agency, and thoughtful decision-making. Through a case study in text revision, we present and analyze two interaction techniques: (1) using a predictive-text interaction to type the assistant's response to a revision request, and (2) highlighting potential edit opportunities in a document. Our implementations demonstrate how these approaches reveal the landscape of writing possibilities and enable fine-grained control. We discuss implications for human-AI writing partnerships and future interaction design directions.

Voice Interaction with Conversational AI Could Facilitate Thoughtful Reflection and Substantive Revision in Writing

Authors: Jiho Kim, Philippe Laban, Xiang 'Anthony' Chen, Kenneth C. Arnold

Proceedings of the Fourth Workshop on Intelligent and Interactive Writing Assistants (In2Writing), NAACL 2025 • May 4, 2025

Writing well requires not only expressing ideas but also refining them through revision, a process facilitated by reflection. Prior research suggests that feedback delivered through dialogues, such as those in writing center tutoring sessions, can help writers reflect more thoughtfully on their work compared to static feedback. Recent advancements in multi-modal large language models (LLMs) now offer new possibilities for supporting interactive and expressive voice-based reflection in writing. In particular, we propose that LLM-generated static feedback can be repurposed as conversation starters, allowing writers to seek clarification, request examples, and ask follow-up questions, thereby fostering deeper reflection on their writing. We argue that voice-based interaction can naturally facilitate this conversational exchange, encouraging writers' engagement with higher-order concerns, facilitating iterative refinement of their reflections, and reducing cognitive load compared to text-based interactions. To investigate these effects, we propose a formative study exploring how text versus voice input influences writers' reflection and subsequent revisions. Findings from this study will inform the design of intelligent and interactive writing tools, offering insights into how voice-based interactions with LLM-powered conversational agents can support reflection and revision.

Towards Full Authorship with AI: Supporting Revision with AI-Generated Views

Authors: Jiho Kim, Ray C. Flanagan, Noelle E. Haviland, ZeAi Sun, Souad N. Yakubu, Edom A. Maru, Kenneth C. Arnold

HAI-GEN Workshop at ACM IUI 2024 • March 18, 2024

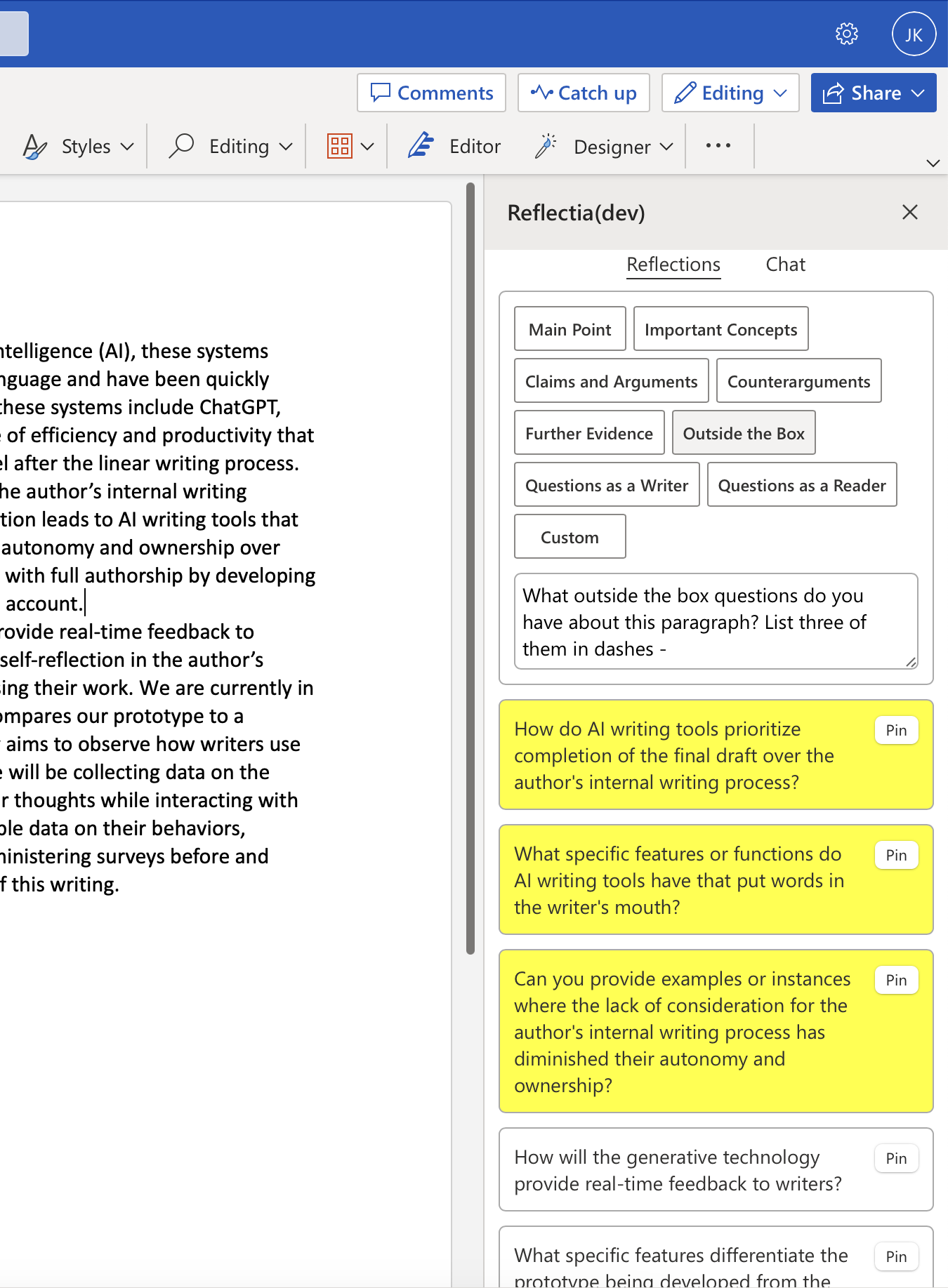

We introduce Textfocals, a UI prototype designed to investigate a human-centered approach that emphasizes the user's role in writing. Textfocals supports the writing process by providing LLM-generated summaries, questions, and advice in a sidebar, encouraging reflection and self-driven revision while maintaining full authorship. A formative user study with Textfocals showed promising evidence that this approach might help users develop underdeveloped ideas, cater to the rhetorical audience, and clarify their writing.

Promoting End-to-End Intentionality with Large Language Models

Authors: Jason Chew, Daniel Kim, Jiho Kim, Ray Flanagan, Kenneth C. Arnold

Calvin STEM Poster Fair • October 25, 2024

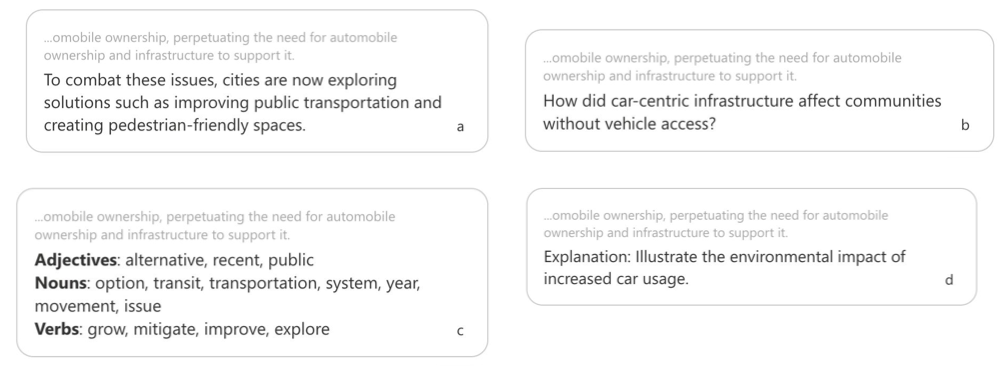

In Summer 2024, our team of undergraduate students conducted a study on how the type of information suggested by an AI writing assistant affects writers’ cognitive engagement with the suggestion and how they appropriate that suggestion in their draft. We developed a Microsoft Word sidebar that offered next-sentence suggestions expressed in four different ways: in addition to predictive-text-style examples that writers could use verbatim (e.g., by copy-and-paste), we also allowed them to request questions that the next sentence might answer, vocabulary they might use, and rhetorical moves (such as giving examples or considering counterarguments) that their next sentence might engage with.

In a pilot study (N=8), writers found questions and rhetorical moves to be useful and friendly. Although writers chose to request examples more often, they often rejected the suggested text. Overall, they rated the Questions suggestion type as most compelling in post-task surveys, followed by Examples. These preliminary results suggest that writers welcomed AI suggestions that could not be inserted verbatim into their documents but instead required further thought. By offering intentionally incomplete suggestions like Questions or Rhetorical Moves, AI systems might become better cognitive partners for writers, enriching thinking rather than circumventing it. This work has been presented at an internal poster fair; we are designing a follow-up experiment to build on these findings for broader publication.